AR + Smartphone Data Visualization App

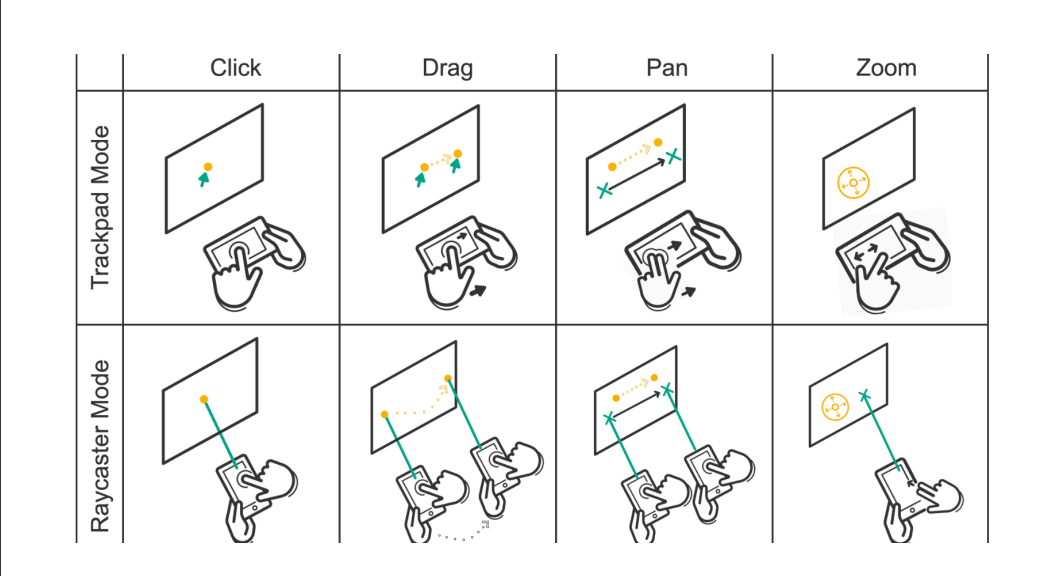

Intrigued by how AR connects our inner and outer worlds, I collaborated on a project that integrated a Microsoft HoloLens with a Google Pixel phone, replacing traditional mid-air gestures with a user-friendly mobile app interface. The goal was to make interaction in an AR environment more accessible and less physically demanding.

My Role: Program the four interactions in C#, pick a C# style guide, create JSON test files, review scripts, and write up weekly documentation for the project.

Status: Completed report, YouTube tutorial, presented poster, GitHub

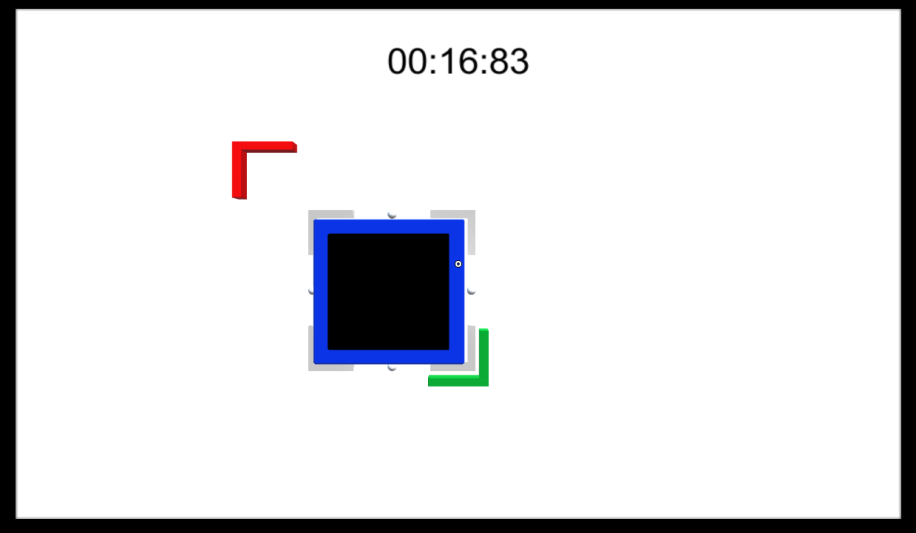

Prototype - Mark Up

Implementation

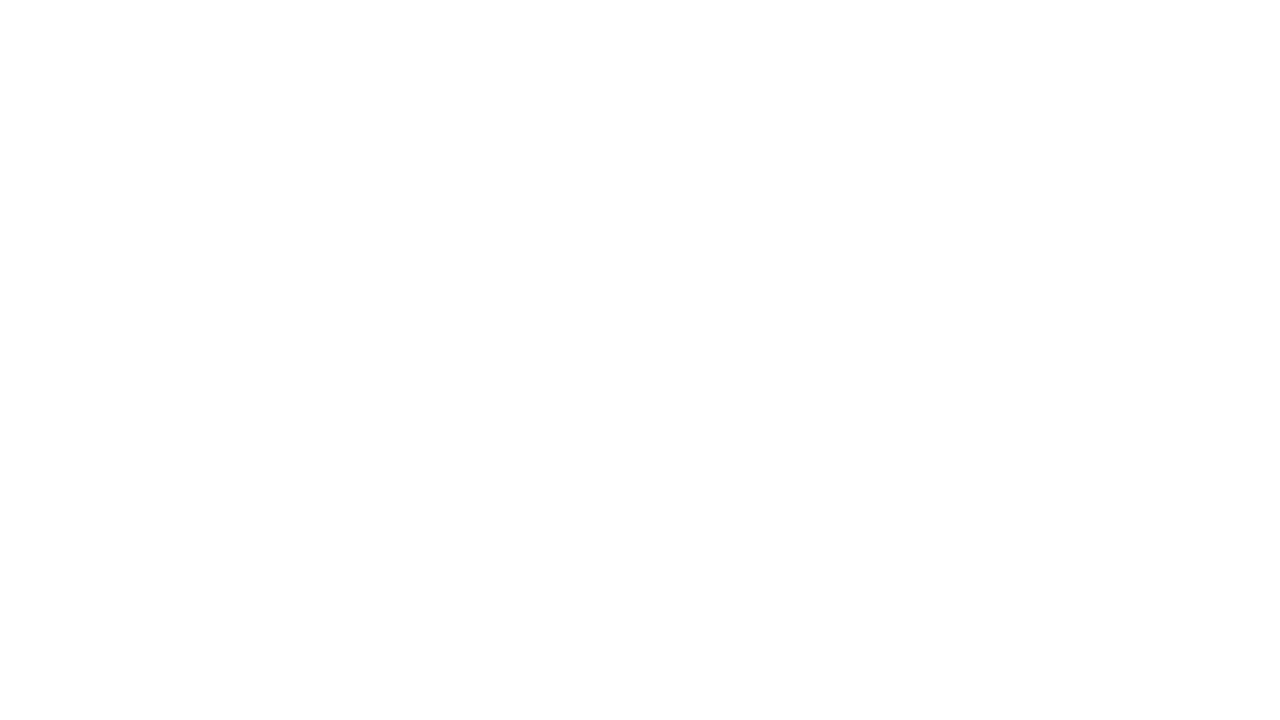

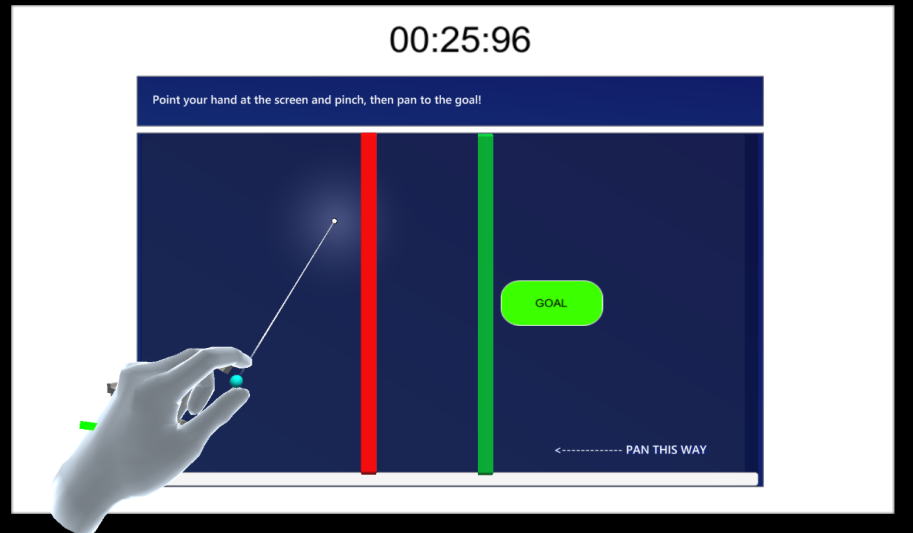

Our goal was to enable multiple modalities, such as trackpad, raycaster, and hand gestures, for each interaction.

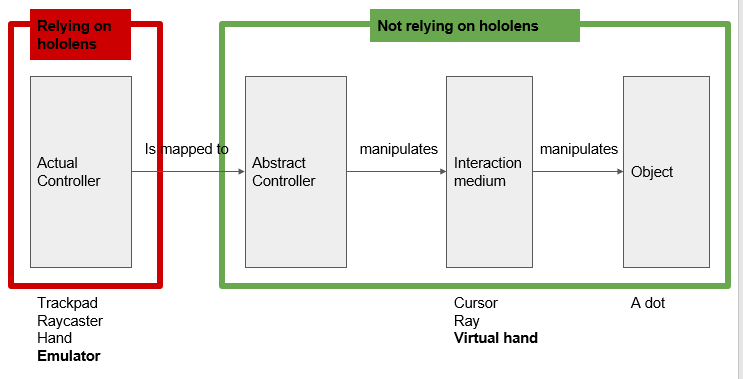

I helped program the C# scripts for the drag, pan, zoom, and click interactions in Unity, which let users perform these actions through the mobile app. I also helped create the JSON test files for each interaction task that appeared on screen.

Home Page

Click & Drag

Zoom

Pan

Tutorial

Click

Drag

Pan

Zoom

Poster

Presented Poster At:

Arizona State University's Capstone Showcase, Tempe, Arizona, April 2023. Student, S. “AR + Smartphone Data Visualization App” (poster).

Future/Challenges/Learnings: AR is deeply rooted in human experience, and by incorporating the senses into interactive systems it offers immense potential to explore the connection between personal perception and the external environment. A key challenge, however, is gaining access to these systems in order to build programming expertise and customize them for diverse projects. I aim to gain more hands-on experience with spatial computing so I can create responsive, real-time technologies that interpret and adapt to users dynamically.